NEST Conference 2025


Europe/Berlin

Virtual


Description

The NEST Initiative invites everyone interested in Neural Simulation Technology and the NEST Simulator to the annual virtual NEST Conference. The NEST Conference provides an opportunity for the NEST Community to meet, exchange success stories, swap advice, and learn about current developments in and around NEST spiking network simulation and its applications. Take the opportunity to advance your skills in using NEST at our hands-on workshops! We particularly encourage young scientists to participate in the conference!

The Virtual NEST Conference 2025

The NEST Conference 2025 was held as a virtual event on Tuesday/Wednesday 17/18 June.

Keynote Speakers

Marja-Leena Linne, Tampere University, Finland

Daniela Gandolfi, Università degli Studi di Modena e Reggio Emilia, Italy

Bernhard Vogginger, TU Dresden, Germany

Barna Zajzon, Forschungszentrum Jülich, Germany

Thank you for attending the NEST Conference 2025! Looking forward to seeing you next year!


Previous NEST Conferences

To get an impression of previous virtual NEST Conferences, please visit

Contact


Registration Zoom

Welcome & Introduction Zoom

Convener: Markus Diesmann

Keynote Zoom

Convener: Markus Diesmann

1

Full-scale point neuron models of mouse and human hippocampal microcircuits

The mathematical modeling of extended brain microcircuits is becoming an effective tool to simulate the neurophysiological correlates of brain activity while opening new perspectives in understanding the mechanisms underlying brain dysfunctions. The generation of realistic networks is however experiencing limitations due to the strategy adopted to build network connectivity and also to the computational cost associated with biophysically detailed neuronal models.

We have recently developed a method to generate neuronal network scaffolds associating geometrical probability volumes with pre- and postsynaptic neurites. In this talk, I will show that the proposed approach makes it possible to generate neuronal networks that have realistic connectivity properties and can be adopted for highly efficient simulation with point-like neuron models, without the explicit use of 3D morphological reconstructions. The method has been benchmarked on both the mouse and human hippocampus CA1 region, and its efficiency at different spatial scales has been explored. The abstract geometric reconstruction of axonal and dendritic occupancy, by effectively reflecting morphological and anatomical constraints, could be integrated into structured simulators generating entire circuits of different brain areas.

Speaker: Daniela Gandolfi (University of Modena and Reggio Emilia)

Talks Zoom

2

Adult Neurogenesis in the Dentate Gyrus

As a rule, no new neurons are born in the adult mammalian brain. As an exception, however, adult neurogenesis is readily observed in niches such as the dentate gyrus where neuronal precursors are transformed into granule cells. Dentate granule cells constitute a major population of principal cells in the trisynaptic circuit and are implicated in hippocampal functions such as pattern separation. Newborn granule cells exhibit distinctive properties that, as they mature, progressively converge toward those of pre-established mature granule cells. While it has been suspected that the age-dependent properties of adult-born cells contribute to their integration into the network, it is not known how exactly the integration dynamics and hippocampal function are affected by it.

We have developed models where adult-born granule cells undergo experimentally-matched maturation, forming new connections within the pre-existing networks. Our findings suggest that the age-dependent properties are critical for network integration. Analysis suggests that, if large numbers of cells are rapidly added, pathological states resembling epilepsy may emerge. Studying different network configurations further indicates that adult-born neurons compete for synaptic resources with mature ones, consistent with the experimental observation that perforant pathway synapses are redistributed between newborn and mature cells.

Our models provide a promising tool to study the dynamics of adult neurogenesis and how it might affect hippocampal computations. In the talk, I will provide an overview of our modelling approach, and briefly summarise our preliminary results. I will also share the details about our latest model and the practical challenges involved with it.

Speaker: Aadhar Sharma (Bernstein Center Freiburg, University of Freiburg)

3

A Graph-Based, In-Memory Workflow Library for Brain/MINDS 2.0 – The Digital Brain Project

The Brain/MINDS 2.0 Digital Brain Project aims to develop an open, interoperable software platform dedicated to digital brain construction. This platform targets seamless integration of neuroscience simulation tools, including TVB, NEST, and BMTK, via their Python APIs, allowing researchers to build comprehensive and detailed brain models. As brain modeling and simulation research evolves, there is a growing need for digital infrastructures that can efficiently handle heterogeneous data while supporting scalable simulation workflows.

Current workflow systems like Snakemake and Nextflow are well-suited for linear data-processing pipelines but are inherently dependent on serialized, I/O-bound data exchanges. This makes them less effective for in-memory data structures typical of neural simulations, posing challenges for constructing understandable and reusable workflow modules.

To address this gap, we introduce a graph-based workflow framework where brain modeling tasks are encapsulated as modular, reusable nodes. These nodes communicate using direct memory references, enabling rapid in-memory propagation of complex neural data (e.g., neuron states, connectivity matrices) and eliminating the overhead of serialization and disk I/O. Workflows are defined as node-edge graphs, fostering flexible, composable scientific pipelines.

In addition, to leverage the benefits of traditional tools, our implementation analyzes data exchange patterns to identify optimal process boundaries, grouping tightly coupled in-memory tasks while enabling file-based I/O modularity where appropriate. This hybrid model aims to support distributed workflow execution with Nextflow and Snakemake under the hood, while maintaining the modularity, clarity, ease of use, and reusability needed for scientific users and collaborative research.

Speaker: Carlos Gutierrez (Okinawa Institute of Science and Technology / SoftBank)
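The node-edge idea described in this abstract can be illustrated with a minimal sketch. It is not the project's library: the `Workflow` class, its `node` and `run` methods, and the node names are hypothetical, and only the Python standard library (`graphlib`) is assumed. Each node is a plain function, edges are declared as input names, and results are handed to downstream nodes as in-memory object references, with no serialization in between.

```python
from graphlib import TopologicalSorter


class Workflow:
    """Hypothetical in-memory workflow: nodes are functions, edges are input names."""

    def __init__(self):
        self.funcs = {}
        self.deps = {}

    def node(self, name, func, inputs=()):
        # Register a node and the names of the upstream nodes it consumes.
        self.funcs[name] = func
        self.deps[name] = tuple(inputs)

    def run(self):
        # Execute nodes in dependency order; results are passed by reference.
        results = {}
        for name in TopologicalSorter(self.deps).static_order():
            args = [results[dep] for dep in self.deps[name]]
            results[name] = self.funcs[name](*args)
        return results


wf = Workflow()
# Toy 2x2 connectivity matrix (row = source, column = target).
wf.node("connectivity", lambda: [[0.0, 1.0], [1.0, 0.0]])
# Summed incoming weight per target neuron.
wf.node("in_degree", lambda w: [sum(col) for col in zip(*w)], inputs=["connectivity"])
# A pass-through node, to show that the very same object travels along the edge.
wf.node("passthrough", lambda w: w, inputs=["connectivity"])
results = wf.run()
```

Because `passthrough` returns the object it received, `results["passthrough"] is results["connectivity"]` holds, which is the property that avoids disk I/O between tightly coupled tasks.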

4

Accelerated cortical microcircuit simulations on massively distributed memory

Comprehensive simulation studies of dynamical regimes of cortical networks with realistic synaptic densities depend on compute systems capable of running such models significantly faster than biological real time. Since CPUs still are the primary target for established simulators, an inherent bottleneck caused by the von Neumann design is frequent memory access with minimal compute. Distributed memory architectures, popularized by the need for massively parallel and scalable processing for ML, offer an alternative.

We introduce extensible simulation technology for spiking networks on massively distributed memory using Graphcore's IPUs. We demonstrate the efficiency of the new technology based on simulations of the microcircuit model by Potjans et al. (2014), commonly used as a reference benchmark. It represents 1 mm² of cortical tissue and is considered a building block of cortical function. We present a custom communication algorithm especially suited for distributed and constrained memory environments, which allows a controlled trade-off between performance and memory usage. Our simulation code achieves an acceleration factor of 15x compared to real time for the full-scale cortical microcircuit model on the smallest device configuration capable of fitting the model in memory. This is competitive with the current record on a static FPGA cluster (Kauth et al., 2023), and further speedup can be achieved at the cost of lower precision.

With negligible compilation times, the simulation code can be extended seamlessly to a wide range of synapse and neuron models, as well as structural plasticity, unlocking a new class of models for extensive parameter-space explorations in computational neuroscience.

Speaker: Catherine Mia Schöfmann (PGI-15)
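As a small worked example of what an acceleration factor relative to real time means, the sketch below converts biological (model) time into wall-clock time. The function name `wallclock_seconds` is hypothetical; the only assumption is the usual reading that a factor of 15 means one second of model time takes 1/15 of a second to simulate.

```python
def wallclock_seconds(biological_seconds, acceleration_factor):
    """Wall-clock time needed to simulate a given span of biological time."""
    if acceleration_factor <= 0:
        raise ValueError("acceleration_factor must be positive")
    return biological_seconds / acceleration_factor


# One minute of model time at the 15x factor quoted in the abstract.
one_minute = wallclock_seconds(60.0, 15.0)
```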

Group photo & short break

Workshop Zoom

5

Using NESTML and NEAT to create massively parallel network simulations with dendritic subunits

The brain is a massively parallel computer. In the human brain, 86 billion neurons convert synaptic inputs into action potential (AP) output. Moreover, even at the subcellular level, computations proceed in a massively parallel fashion, e.g. in each dendritic compartment. It is only natural, thus, to use the parallelisation and vectorisation capabilities of modern supercomputers to simulate the brain in a massively parallel fashion.

The NEural Simulation Tool (NEST) is the reference with regard to the massively parallel simulation of spiking network models. We have extended the scope of the NESTML modelling language to support vectorized multi-compartment models, with dendrites featuring user-specified dynamical processes. This allows users to define simple multi-compartmental neuron models through NEST’s PyNEST API, capturing key dendritic computations in large network models.

A new complementary tool in the NEST Initiative, the NEuronal Analysis Toolkit (NEAT), then allows full biophysical models and simplifications thereof to be included in NEST network simulations. This tool provides high-level functionalities for defining biophysically realistic neuron models, and extensive toolchains to simplify these complex models. The resulting simplifications can be exported programmatically to NEST, and thus embedded in network simulations.

In this workshop, we will go over the mechanics of defining and compiling compartmental models using NESTML, of implementing simple compartmental layouts through the PyNEST API, and of creating biophysical models in NEAT and embedding their simplifications in NEST network simulations. We will present this in the form of an interactive Jupyter notebook tutorial, which will subsequently become part of NEST’s documentation.

Speakers: Leander Ewert (IAS-6 Forschungszentrum Jülich), Willem Wybo (Forschungszentrum Jülich)

12:45

Lunch break

Workshop Zoom

6

Power Spectral Analysis of NEST Simulation Using Neo and Elephant

In this introductory-level tutorial, we will introduce participants to analyzing electrophysiological data from a neural network simulation using Neo (https://neuralensemble.org/neo) for representing and handling the data and Elephant (https://python-elephant.org) for performing the actual analysis. We will cover the basic Neo data objects and how to load simulation outcomes into the framework. We then focus the analysis on obtaining and contrasting power spectral density estimates from spike train data using multiple approaches. Participants will be able to run the tutorial on their laptops with minimal installation effort using the EBRAINS Collaboratory.

Speaker: Michael Denker (Institute for Advanced Simulation (IAS-6), Forschungszentrum Jülich)
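To make the idea of a power spectral density estimate from spike train data concrete, here is a minimal sketch that deliberately does not use Neo or Elephant: it bins spike times into counts and computes a plain one-sided periodogram with a direct DFT from the standard library. The helper names `bin_spikes` and `periodogram`, the 10 ms bin width, and the synthetic 10 Hz spike train are all assumptions for illustration; the tutorial itself relies on Elephant's estimators.

```python
import cmath
import math


def bin_spikes(spike_times_s, t_stop_s, bin_s):
    """Count spikes in consecutive bins of width bin_s over [0, t_stop_s)."""
    n_bins = int(round(t_stop_s / bin_s))
    counts = [0] * n_bins
    for t in spike_times_s:
        idx = int(t / bin_s)
        if 0 <= idx < n_bins:
            counts[idx] += 1
    return counts


def periodogram(counts, bin_s):
    """One-sided periodogram of the mean-subtracted binned spike counts."""
    n = len(counts)
    fs = 1.0 / bin_s  # sampling rate of the binned signal in Hz
    mean = sum(counts) / n
    x = [c - mean for c in counts]
    freqs, psd = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n))
        power = abs(coeff) ** 2 / (fs * n)
        if 0 < k < n / 2:
            power *= 2.0  # fold negative frequencies into the one-sided estimate
        freqs.append(k * fs / n)
        psd.append(power)
    return freqs, psd


# Synthetic, perfectly regular 10 Hz spike train lasting 2 s.
spikes = [0.055 + 0.1 * j for j in range(20)]
counts = bin_spikes(spikes, t_stop_s=2.0, bin_s=0.01)
freqs, psd = periodogram(counts, bin_s=0.01)
```

For this regular train the power is concentrated at 10 Hz and its harmonics, while frequencies in between carry essentially none. Welch-type estimators, which average periodograms over segments, trade frequency resolution for lower variance.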

15:15

Short break

Keynote Zoom

7

The SpiNNaker2 neuromorphic system for large-scale brain simulation and energy-efficient AI

TU Dresden has recently completed the construction of the world’s largest brain-inspired supercomputer “SpiNNcloud”, which will allow the real-time simulation of up to 5 billion neurons. It is based on the neuromorphic “SpiNNaker2” chip featuring 152 low-power ARM cores and digital accelerators to speed up the processing of spiking and deep neural networks. The overall system comprises a scalable communication infrastructure enabling the low-latency multi-cast routing of event packets such as spikes. All of this makes SpiNNaker2 a unique platform to explore large-scale brain simulation and neuro-inspired AI algorithms.

This talk will introduce the SpiNNaker2 chip and system architecture and explain how spiking neural networks are simulated in real time. Further, we will show examples of using SpiNNaker2 for efficient event-based AI processing. Finally, we will provide an overview of the software stack (including efforts on supporting NESTML) and give an outlook on the access options for SpiNNaker2.

Speaker: Bernhard Vogginger (TU Dresden)

Mingle Gathertown

Keynote Zoom

8

Signal propagation and denoising through topographic modularity of neural circuits

To navigate a noisy and dynamic environment, the brain must form reliable representations from ambiguous sensory inputs. Since only information that successfully propagates through cortical hierarchies can influence perception and decision-making, preserving signal fidelity across processing stages is essential. This work investigates whether topographic maps — characterized by stimulus-specific pathways that preserve the relative organization of neuronal populations — may serve as a structural scaffold for robust transmission of sensory signals.

Using a large modular circuit of spiking neurons comprising multiple sub-networks, we show that topographic projections are crucial for accurate propagation of stimulus representations. These projections not only maintain representational fidelity but also help the network suppress sensory and intrinsic noise. As input signals pass through the network, topographic precision regulates local E/I balance and effective connectivity, leading to gradual enhancement of internal representations and increased signal-to-noise ratio.

This denoising effect emerges beyond a critical threshold in the sharpness of feedforward connections, giving rise to inhibition-dominated regimes where responses along stimulated pathways are selectively amplified. Our results indicate that this phenomenon is robust and generalizable, largely independent of model specifics. Through mean-field analysis, we further demonstrate that topographic modularity acts as a bifurcation parameter that controls the macroscopic dynamics, and that in biologically constrained networks, such a denoising behavior is contingent on recurrent inhibition.

These findings suggest that topographic maps may universally support denoising and selective routing in neural systems, enabling behaviorally relevant regimes such as stable multi-stimulus representations, winner-take-all selection, and metastable dynamics associated with flexible behavior.

Speaker: Barna Zajzon (IAS-6, Forschungszentrum Jülich)

Workshop Zoom

9

Rapid prototyping in spiking neural network modeling with NESTML and NEST Desktop

NESTML is a domain-specific modeling language for neuron models and synaptic plasticity rules[1]. It is designed to support researchers in computational neuroscience to specify models in a precise and intuitive way. These models can subsequently be used in dynamical simulations of spiking neural networks on several platforms, particularly the NEST Simulator[2]. However, specification of the network architecture requires using the NEST Python API, which requires users to have programming skills. This poses a problem for beginners with little to no coding experience.

This issue is addressed by developing NEST Desktop[3], a graphical user interface (GUI) that serves as an intuitive, programming-free interface to NEST. The interface is easily installed and accessed via an internet browser on laptops or in cloud-based deployments.

Here, we demonstrate the integration of all three components: NEST Desktop, NESTML, and NEST. As a result, researchers and students can customize existing models or develop new ones using NESTML and have them instantly available to create networks using the GUI of NEST Desktop before simulating them using NEST. In this workshop, we will first demonstrate the simulation of a balanced network in NEST Desktop using a built-in integrate-and-fire neuron model. As a second step, we will create a new customised neuron model with spike-frequency adaptation (SFA) in NESTML from within the NEST Desktop interface, and use this model to simulate the balanced network. The effects of SFA on network dynamics will then be studied inside the NEST Desktop GUI through simulation data visualization and analysis tools.

Speakers: Charl Linssen (Simulation Lab Neuroscience, Forschungszentrum Jülich GmbH), Ms Pooja Babu (Simulation & Data Lab Neuroscience, Institute for Advanced Simulation, Jülich Supercomputing Centre (JSC), Forschungszentrum Jülich, Germany)

11:15

Short break

Talks Zoom

10

PyNEST NG: A new modern interface to the NEST kernel

SLI, the Simulation Language Interpreter, has been a defining feature of NEST since its beginning and its primary user interface [1]. Since the introduction of PyNEST with the first public beta of NEST 2.0 in 2008 [2], users have increasingly switched from SLI to PyNEST, to a point where today only a few users and developers are fluent in SLI or familiar with the interpreter's C++ implementation. We thus decided several years ago to remove the SLI interpreter from the NEST simulator and connect PyNEST directly with a NEST C++ API.

A key challenge to removing the SLI interpreter from NEST was the DictionaryDatum data structure provided by SLI. It is central to data exchange between user and simulator and thus pervades much of NEST kernel and model code. As early as 2018, Jochen Eppler and Håkon Mørk began work on a "SLI-free" NEST, including a replacement for the SLI dictionary data structure. Nicolai Haug and Hans Ekkehard Plesser contributed at later stages. One of the last stumbling blocks to be resolved was an efficient approach to dictionary access checks, which is crucial to detect misspelled parameter names.

The other major challenge in removing the SLI interpreter was to port the hundreds of tests for NEST written in SLI to PyTest. This was undertaken as a broad community effort during several hackathons and is not yet entirely completed. This work is done in the NEST master branch to immediately make the ported tests available.

At present, PyNEST NG is essentially complete, with benchmark tests on various complex models indicating good, for some models notably reduced, model construction times. Once the remaining tests have been ported from SLI to PyTest, PyNEST NG will be ready for integration into NEST. This will only minimally affect the user interface.

In my talk, I will give an overview of the implementation of NEST's new Python interface and its benefits for users and developers, and discuss challenges ahead.

Acknowledgements

We are grateful to our many colleagues in the NEST developer community who contributed to the work towards PyNEST NG, in particular by porting tests, and to Renan Shimoura for benchmarking PyNEST NG against the clustered MAM model and to Markus Diesmann for co-piloting a major merge of the master branch into PyNEST NG after 15 months of inactivity. Research reported here was supported by the European Union’s Horizon 2020 Framework Programme for Research and Innovation under Specific Grant Agreements No. 785907 (Human Brain Project SGA2) and No. 945539 (Human Brain Project SGA3).

References

[1] Diesmann, M., Gewaltig, M.-O., & Aertsen, A. (1995). SYNOD: An Environment for Neural Systems Simulation—Language Interface and Tutorial (Technical Report GC-AA-/95-3; p. 72). Weizmann Institute of Science.

[2] Eppler, J. M., Helias, M., Muller, E., Diesmann, M., & Gewaltig, M.-O. (2008). PyNEST: A convenient interface to the NEST simulator. Front Neuroinformatics, 2, 12. https://doi.org/10.3389/neuro.11.012.2008

Speaker: Hans Ekkehard Plesser (Norwegian University of Life Sciences)
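The dictionary access checks mentioned in this abstract can be illustrated with a small sketch. It is not NEST's implementation: the `CheckedDict` class and the `set_neuron_params` function are hypothetical, and the two parameter names are used only as examples. The idea shown is that a dictionary records which keys the consumer actually read, so any entry left unread (typically a misspelled parameter name) can be reported instead of being silently ignored.

```python
class CheckedDict(dict):
    """Dictionary that remembers which keys have been read (illustrative only)."""

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self._accessed = set()

    def __getitem__(self, key):
        self._accessed.add(key)
        return super().__getitem__(key)

    def get(self, key, default=None):
        self._accessed.add(key)
        return super().get(key, default)

    def unaccessed(self):
        # Keys supplied by the user that no code ever read.
        return sorted(set(self) - self._accessed)


def set_neuron_params(params):
    """Hypothetical consumer: reads the keys it knows, then checks for leftovers."""
    checked = CheckedDict(params)
    state = {
        "tau_m": checked.get("tau_m", 10.0),
        "C_m": checked.get("C_m", 250.0),
    }
    leftover = checked.unaccessed()
    if leftover:
        raise KeyError(f"Unused dictionary entries: {leftover}")
    return state


ok = set_neuron_params({"tau_m": 20.0})
try:
    set_neuron_params({"tau_n": 20.0})  # misspelled key
    misspelling_detected = False
except KeyError:
    misspelling_detected = True
```

Tracking reads rather than validating against a fixed list of names keeps the check local to the code that consumes the dictionary. The cost of that bookkeeping on every access is what makes an efficient approach matter.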

11

A NEST-based framework for the parallel simulation of networks of compartmental models with customizable subcellular dynamics

The human brain computes in a massively parallel fashion, not only at the level of the neurons, but also through complex subcellular signaling networks which support learning and memory. Therefore, it’s desirable to utilize the parallelization capabilities of modern supercomputers to simulate the brain in a massively parallel fashion. The NEural Simulation Tool (NEST) [1] enables massively parallel spiking network simulations, being optimized to efficiently communicate spikes across MPI processes [2]. However, so far, NEST had limited options to simulate subcellular processes as part of the network. We have extended the NESTML modeling language [3] to support multi-compartment models, featuring user-specified dynamical processes (Fig 1A-C). These dynamics are compiled into NEST models, which optimally leverage CPU vectorization. This adds a deeper, subcellular level of parallelization, allowing individual cores to parallelize multiple compartments. Furthermore, we leverage the Hines algorithm [4] to achieve stable and efficient integration of the system. Overall we gain single-neuron speedups compared to the field-standard NEURON simulator [5] of up to a factor of four to five (Fig 1D). Thus, we enable embedding user-specified dynamical processes in large-scale networks, representing (i) ion channels, (ii) synaptic receptors that may be subject to a-priori arbitrary plasticity processes, or (iii) slow processes describing molecular signaling or ion concentration dynamics. With the present work, we facilitate the creation and efficient, distributed simulations of such networks, thus supporting the investigation of the role of dendritic processes in network-level computations involving learning and memory.

Speakers: Leander Ewert (IAS-6 Forschungszentrum Jülich), Willem Wybo (Forschungszentrum Jülich)
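The Hines algorithm referred to in this abstract solves the linear system that arises when a branched cable is discretized into compartments. A minimal sketch follows, under stated assumptions: compartments are numbered so that every parent index is smaller than its child's index, compartment 0 is the root, and the function and argument names (`hines_solve`, `lower`, `upper`) are hypothetical. This is a generic textbook-style formulation, not the code generated by NESTML.

```python
def hines_solve(parent, diag, lower, upper, rhs):
    """Solve A x = rhs for a tree-structured matrix in O(n) operations.

    parent[i]: index of the parent compartment of i (parent[0] is ignored)
    diag[i]:   A[i][i]
    lower[i]:  A[i][parent[i]]  (coupling of compartment i to its parent)
    upper[i]:  A[parent[i]][i]  (coupling of the parent to compartment i)
    Requires parent[i] < i for all i >= 1.
    """
    n = len(diag)
    d = list(diag)
    r = list(rhs)
    # Backward sweep: eliminate each child from its parent's row, leaves first.
    for i in range(n - 1, 0, -1):
        p = parent[i]
        factor = upper[i] / d[i]
        d[p] -= factor * lower[i]
        r[p] -= factor * r[i]
    # Forward sweep: solve for the root, then substitute down the tree.
    x = [0.0] * n
    x[0] = r[0] / d[0]
    for i in range(1, n):
        x[i] = (r[i] - lower[i] * x[parent[i]]) / d[i]
    return x


# Toy example: a root compartment with two child compartments.
parent = [-1, 0, 0]
diag = [4.0, 3.0, 2.0]
lower = [0.0, -1.0, -1.0]
upper = [0.0, -1.0, -1.0]
rhs = [-1.0, 5.0, 5.0]
solution = hines_solve(parent, diag, lower, upper, rhs)
```

For an unbranched cable, this reduces to the familiar tridiagonal (Thomas) solver. The ordering constraint on compartment indices is what lets a single backward and a single forward pass suffice for arbitrary trees.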

12

Modeling Calcium-Mediated Spike-Timing Dependent Plasticity in Spiking Neural Networks

This study translates the model of Chindemi et al. on calcium-dependent neocortical plasticity into a spiking neural network framework. Building on their work, we implemented a computationally efficient model comprising a point neuron and synapse model, using NESTML. Our approach combines the Hill-Tononi (HT) neuron, which features detailed NMDA and AMPA conductance dynamics, with the Tsodyks-Markram (TM) stochastic synapse, which controls vesicle release probability. We extended these components to create a comprehensive framework that captures the relationship between calcium dynamics and spike-timing dependent plasticity while maintaining computational efficiency for large-scale network simulations. Both our model and Chindemi’s rely on the assumption that calcium-dependent processes following paired pre- and post-synaptic activity influence synaptic efficacy on both sides of the synapse: by modifying the maximum AMPA conductance (G_AMPA) at the post-synaptic site and the release probability (U_SE) at the synapse. We validated our implementation through a series of experiments: first confirming the functionality of the TM synapse model paired with HT neuron modifications to account for calcium currents, then testing isolated pre- and post-synaptic activations, generating NMDA and VDCC calcium currents respectively. Finally, we examined paired pre-post stimulation at varying time intervals. Our results successfully replicate Chindemi’s findings obtained with more complex multicompartmental models, and also assess plasticity outcomes according to the distance of the synaptic input from the soma, as shown experimentally by P. J. Sjöström and M. Häusser. This work bridges neuronal activity patterns and synaptic modifications underlying learning and memory.

Speaker: Carlo Andrea Sartori (Politecnico di Milano)

12:30

Lunch break

Talks Zoom

13

Towards an empirically based model of the prefrontal cortex using AdEx neurons and NEST

We present the development of a biologically grounded spiking neuronal network model of the prefrontal cortex (PFC), implemented in the NEST simulator using the adaptive exponential integrate-and-fire (AdEx) neuron model. Based on the architecture proposed by Hass et al. (2016), our model replaces the simplified AdEx (simpAdEx) neurons used in their study with the full AdEx model, thereby expanding the dynamical repertoire of individual neurons while preserving the original network topology.

The network comprises 1,000 neurons distributed across two excitatory and eight inhibitory populations, spanning cortical layers 2/3 and 5. Implementation is carried out in PyNEST, with neuron and synapse models defined in NESTML. The model supports AMPA, NMDA, and GABAergic synapses, each described by double-exponential kinetics, and includes a 30% synaptic transmission failure rate. Synaptic weights and delays are sampled from log-normal and normal distributions, respectively. Connectivity is defined by population-specific connection probabilities, and synapses follow the Tsodyks-Markram (2002) short-term plasticity dynamics.

The goal is to provide a robust, data-driven, and openly accessible model of PFC circuitry to the NEST community. Preliminary simulations show that the network produces realistic membrane potential traces and supports asynchronous-irregular firing states consistent with in vivo cortical activity. This model offers a flexible platform for exploring prefrontal cortical dynamics and serves as a foundation for investigating both physiological and pathological states in large-scale simulations.

Speaker: Pedro Ribeiro Pinheiro (Universidade de São Paulo)
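For readers unfamiliar with the AdEx neuron used in this model, the following is a minimal single-neuron sketch with a forward-Euler integrator. It is not the PFC model, does not use NEST or NESTML, and omits synapses entirely; the function name `simulate_adex` is hypothetical, and the parameter values are the commonly quoted ones from Brette and Gerstner (2005), taken here as assumptions rather than the fitted values of the study.

```python
import math


def simulate_adex(i_ext_pa, t_stop_ms=500.0, dt_ms=0.1):
    """Forward-Euler AdEx neuron with constant input current; returns spike times in ms.

    Units: pF, nS, mV, pA, ms (so pA / pF gives mV / ms).
    """
    c_m, g_l, e_l = 281.0, 30.0, -70.6   # capacitance, leak conductance, leak reversal
    v_th, delta_t = -50.4, 2.0           # exponential threshold and slope factor
    tau_w, a, b = 144.0, 4.0, 80.5       # adaptation time constant, coupling, spike jump
    v_peak, v_reset = 0.0, -60.0         # spike detection level and reset potential

    v, w = e_l, 0.0
    spike_times = []
    for step in range(int(round(t_stop_ms / dt_ms))):
        exp_term = g_l * delta_t * math.exp((v - v_th) / delta_t)
        dv = (-g_l * (v - e_l) + exp_term - w + i_ext_pa) / c_m
        dw = (a * (v - e_l) - w) / tau_w
        v += dt_ms * dv
        w += dt_ms * dw
        if v >= v_peak:
            # Spike: record the time, reset the membrane, increment adaptation.
            spike_times.append((step + 1) * dt_ms)
            v = v_reset
            w += b
    return spike_times


silent = simulate_adex(0.0)
driven = simulate_adex(1000.0)
```

With no input the neuron stays at rest, while a sufficiently strong constant current produces repetitive firing whose rate is shaped by the adaptation variable `w`. A production simulation would use an adaptive solver, as forward Euler is chosen here only for brevity.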

Keynote Zoom

14

Astroglia in Neural Circuits: Modeling Synaptic and Network Modulation

Astroglia, or astrocytes, are a major class of glial cells in the central nervous system. Long considered passive support elements, they are now widely recognized as active and essential components of neural processing. Astroglia engage in complex, bidirectional communication with neurons [1], responding to neuronal input and modulating synaptic activity through diverse molecular and cellular mechanisms. They play crucial roles in regulating extracellular ion concentrations, clearing neurotransmitters, providing metabolic support, and contributing to the formation, maintenance, and remodeling of synapses. These functions establish astroglia as critical regulators of neural circuit dynamics and overall brain function, both under normal physiological conditions and in the context of neurological disorders. To better understand the complex nature of neuron–astroglia interactions in the brain, computational modeling of these processes is essential [2].

In this talk, I will review the main types of neuronal input signals to astroglia and describe the key astroglial mechanisms at both molecular and cellular levels that drive their functional outputs. I will then discuss the consequences of these outputs on brain activity, highlighting their relevance to brain function in health and disease. Furthermore, I will present some of our recent work in developing computational modeling methodologies, including new models and tools aimed at capturing astroglial influence on synaptic [3] and network dynamics [4], with particular emphasis on our astroglia implementations in the NEST simulation platform [5,6]. Finally, I will discuss how astroglial activity may shape brain functions such as synaptic transmission, network excitability, local and global network synchronization, long-distance signal propagation, and, ultimately, learning and memory in the brain.

Speaker: Marja-Leena Linne (Tampere University, Finland)

Group photo & short break

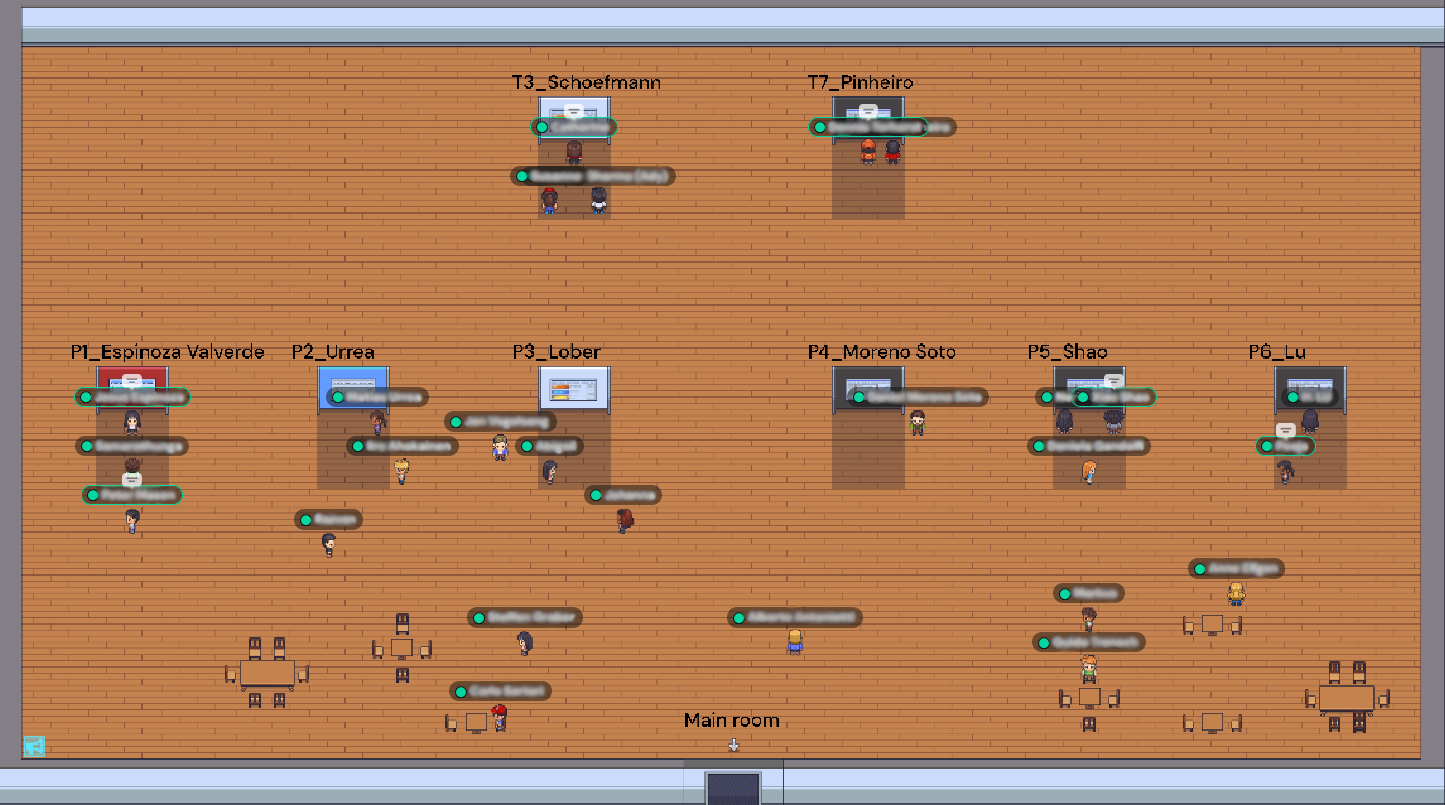

Posters Gathertown

Conveners: Daniel Moreno Soto (Department of Physics, Faculty 1, RWTH Aachen University, Germany; Institute for Advanced Simulation (IAS-6), Jülich Research Centre, Germany), Han Lu (Institute for Advanced Simulation (IAS), Jülich Supercomputing Center, Forschungszentrum Jülich), Melissa Lober, Samuel Madariaga (Universidad de Chile), Xiao Shao, Jesus Andres Espinoza Valverde (School of Mathematics and Natural Sciences, Bergische Universität Wuppertal, Wuppertal, Germany)

Wrap-up Gathertown

Convener: Abigail Morrison (INM-6 Forschungszentrum Jülich)

Mingle Gathertown